MongoDB Aggregation Pipeline Patterns Part 1

Today we are going to explore different ways of transforming raw data efficiently. I'll be going over a couple of patterns that will make it easier for you to calculate everything you need in a single MongoDB aggregation pipeline query. Consider the following data set for these examples: ``` //...

Today we are going to explore different ways of transforming raw data efficiently. I'll be going over a couple of patterns that will make it easier for you to calculate everything you need in a single MongoDB aggregation pipeline query.

Consider the following data set for these examples:

``` // Sales Collection [ {sales: 10, week: 1, year: 2016, source: 'retail'}, {sales: 12, week: 2, year: 2016, source: 'retail'}, {sales: 14, week: 3, year: 2016, source: 'retail'}, {sales: 16, week: 4, year: 2016, source: 'retail'}, {sales: 1, week: 1, year: 2016, source: 'online'}, {sales: 2, week: 2, year: 2016, source: 'online'}, {sales: 4, week: 3, year: 2016, source: 'online'}, {sales: 6, week: 4, year: 2016, source: 'online'}, {sales: 9, week: 1, year: 2015, source: 'retail'}, {sales: 11, week: 2, year: 2015, source: 'retail'}, {sales: 13, week: 3, year: 2015, source: 'retail'}, {sales: 15, week: 4, year: 2015, source: 'retail'}, {sales: 0, week: 1, year: 2015, source: 'online'}, {sales: 1, week: 2, year: 2015, source: 'online'}, {sales: 2, week: 3, year: 2015, source: 'online'}, {sales: 3, week: 4, year: 2015, source: 'online'},

{sales: 10, week: 1, year: 2016, source: 'retail'}, {sales: 12, week: 2, year: 2016, source: 'retail'}, {sales: 14, week: 3, year: 2016, source: 'retail'}, {sales: 16, week: 4, year: 2016, source: 'retail'}, {sales: 1, week: 1, year: 2016, source: 'online'}, {sales: 2, week: 2, year: 2016, source: 'online'}, {sales: 4, week: 3, year: 2016, source: 'online'}, {sales: 6, week: 4, year: 2016, source: 'online'}, {sales: 9, week: 1, year: 2015, source: 'retail'}, {sales: 11, week: 2, year: 2015, source: 'retail'}, {sales: 13, week: 3, year: 2015, source: 'retail'}, {sales: 15, week: 4, year: 2015, source: 'retail'}, {sales: 0, week: 1, year: 2015, source: 'online'}, {sales: 1, week: 2, year: 2015, source: 'online'}, {sales: 2, week: 3, year: 2015, source: 'online'}, {sales: 3, week: 4, year: 2015, source: 'online'}, ] ```

Split Concerns

This method is great for setting up data before doing larger calculations.

// Pipeline instructions const pipeline = \[ {$group: { \_id: {week: '$week', source: '$source'}, sales: {$sum: {$cond: \[{$eq: \['$year', 2016\]},'$sales',0\]}}, salesLastYear: {$sum: {$cond: \[{$eq: \['$year', 2015\]},'\$sales',0\]}}, }}, \]

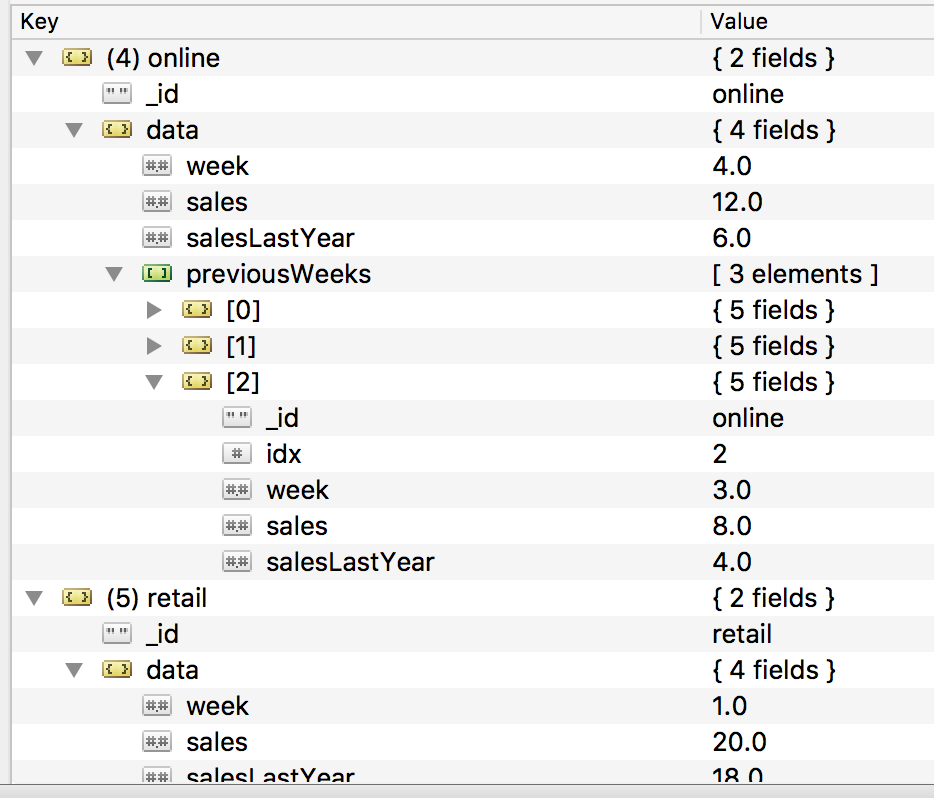

In this example we are grouping by week and source and doing a \$sum of sales. We are combining last year's sales with this year's sales.

This will make your pipeline easier to understand. You should always wait until the last step to actually calculate the results you want to see. Everything you are doing before that is to set up the variables you need to make those calculations.

Unwind to Index

This method is great for indexing data and can be useful when order matters.

// Pipeline instructions const pipeline = \[ {$group: { \_id: {week: '$week', source: '$source'}, sales: {$sum: {$cond: \[{$eq: \['$year', 2016\]},'$sales',0\]}}, salesLastYear: {$sum: {$cond: \[{$eq: \['$year', 2015\]},'$sales',0\]}}, }}, {$sort: {'\_id.week': 1}}, {$group: { \_id: '$\_id.source', d: {$push: '$$ROOT'}, }}, {$unwind: { path: '\$d', includeArrayIndex: 'idx', }}, \]

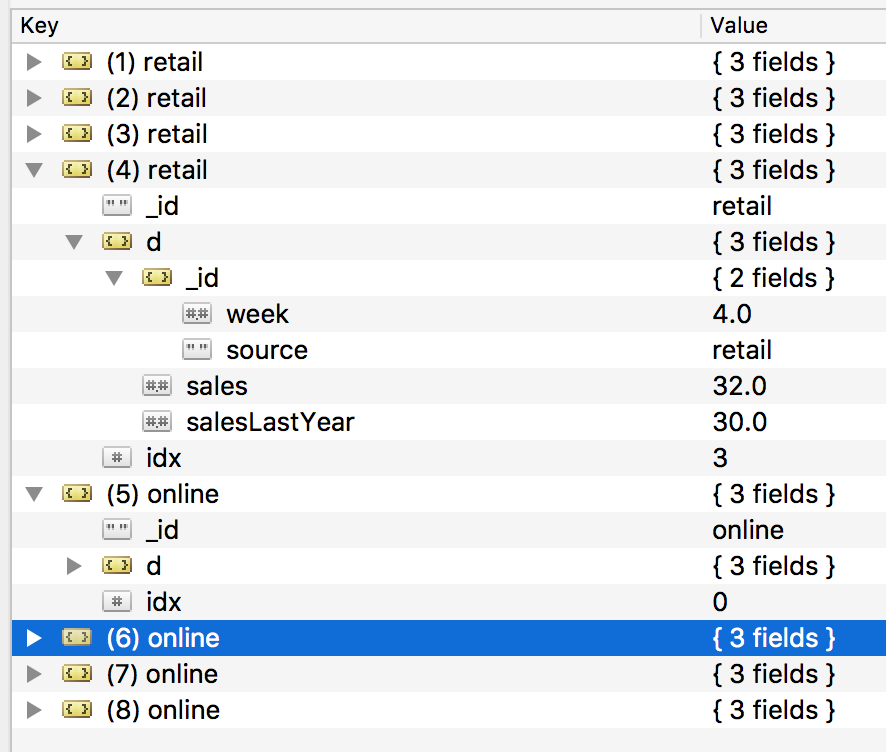

Notice how we sort the result by week number. Then we group by source to push all of the weeks into an ordered array. Now that we have the data of each source in ordered weeks we \$unwind but include an index by using includeArrayIndex. With this, we know the exact position of the week inside the array but have a normalized mongo object.

The result looks like this:

Group to Map and Filter

This method is great for remapping data.

Suppose we need historical data to be mapped into each week for each source. We would need to do this.

``` // Pipeline instructions const pipeline = [ {$group: { _id: {week: '$week', source: '$source'}, sales: {$sum: {$cond: [{$eq: ['$year', 2016]},'$sales',0]}}, salesLastYear: {$sum: {$cond: [{$eq: ['$year', 2015]},'$sales',0]}}, }}, {$sort: {'_id.week': 1}}, {$group: { _id: '$_id.source', d: {$push: '$$ROOT'}, }}, {$unwind: { path: '\$d', includeArrayIndex: 'idx', }},

// New Part {$project: { _id: 1, idx: 1, week: '$d._id.week', sales: '$d.sales', salesLastYear: '$d.salesLastYear', }}, {$group: { _id: '$_id', data: {$push: '$$ROOT'}, }}, {$project: { _id: 1, data: { $map: { input: '$data', as: 'datum', in: { week: '$$datum.week', sales: '$$datum.sales', salesLastYear: '\$$datum.salesLastYear', previousWeeks: {$filter: { input: '$data', as: 'filterDatum', cond: {$lt: ['$$filterDatum.idx', '$$datum.idx']}, }}, }, }, }, }}, {$unwind: '$data'}, ] ```

First we $project to create an object that's easier to work with. Next we $group all of that data into each of the sources so we can have it all together. Now we can use $map and $filter to look through each row of data and attach all of the previous weeks by using the index we calculated with the previous technique.

The $map function takes in 3 commands input, as, and in. input will set which array in the current document you want to map, as sets the name of each element in the array, and in is where the actual work happens, $map will return a new version of the previous array.

The \$filter function takes in 3 commands as well input, as, and cond. input will set which array in the current document you want to filter, as sets the name of each element in the array, and cond is the condition that needs to return true to include in the new array. This function returns a smaller array of the previous array.

The result of this operation is this: